You’ve seen it before. Someone pastes a prompt into ChatGPT, hits enter, and out comes a response that reads like it was pulled straight from the brain of a Pulitzer-winning columnist. Meanwhile, your own prompt—carefully worded, politely phrased—yields an answer that sounds like it was written by a tired intern on a Friday afternoon.

So what gives? How do some people seem to unlock AI’s full potential while others barely scratch the surface?

The secret often boils down to three simple words: “Act as if.”

It doesn’t look revolutionary on paper. But behind that tiny phrase lies an enormous shift in how you interact with generative AI systems—and once you learn to wield it properly, it changes everything.

Let’s unpack why it works, where it came from, and how people are quietly using it to coax jaw-droppingly accurate answers out of machines.

The Psychology of Pretending

“Act as if” is more than a clever prompt hack. It taps into how large language models like ChatGPT work. These systems don’t think or know things the way humans do. They predict which token (roughly, a word or word fragment) is most likely to come next given your input, and that prediction is strongly influenced by the role, tone, and context you supply.

When you say “act as if you’re a seasoned financial advisor” or “act as if you’ve been working as a trauma therapist for 15 years,” you aren’t just labeling the response. You’re shaping the persona the model simulates, and that framing primes the model to surface knowledge, structure, and tone that align with that identity.

In a way, it’s like method acting for machines.

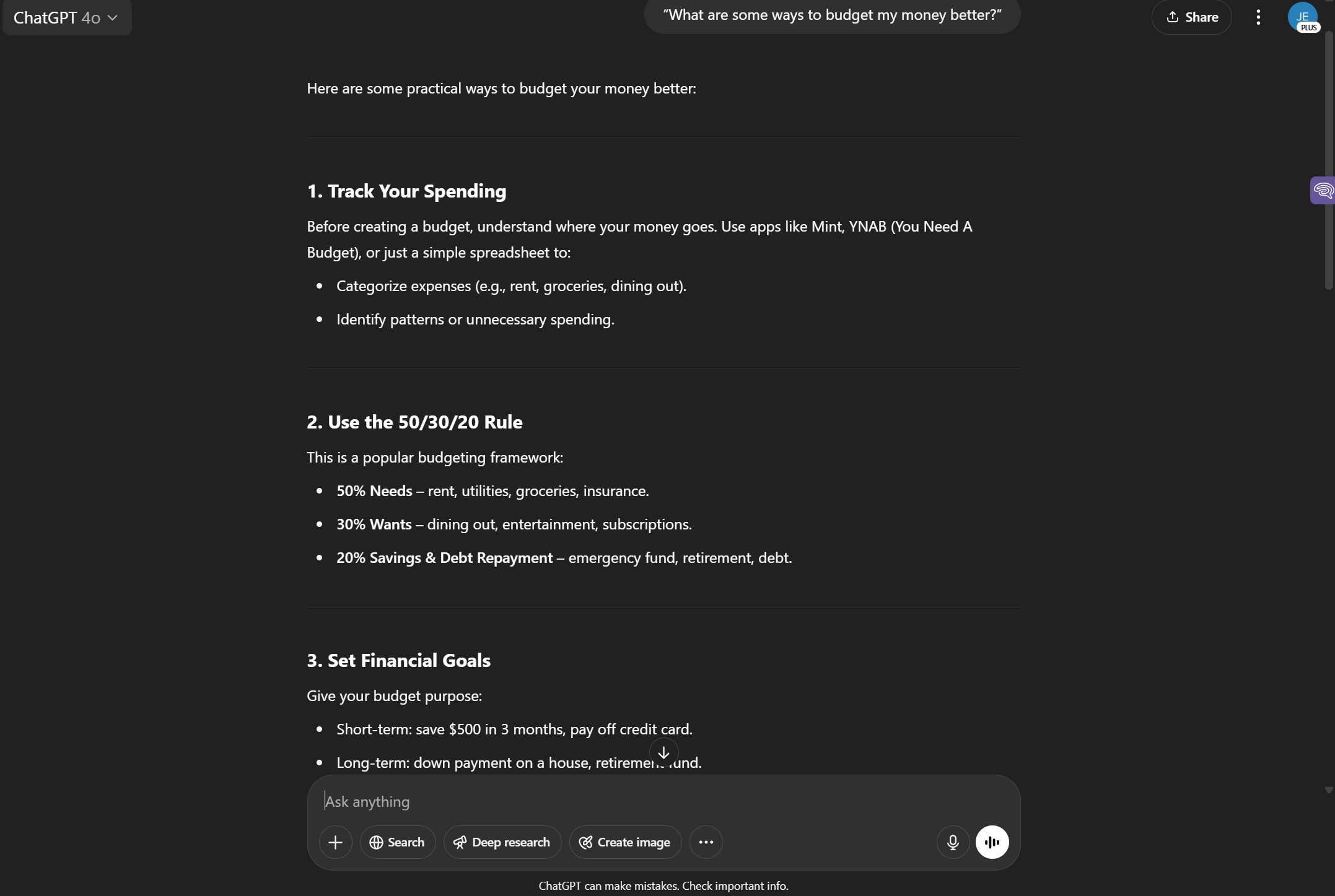

Compare these two prompts that aim to teach a user how to budget their finances:

- “What are some ways to budget my money better?”

Here are the first three steps of ChatGPT’s generic response to this prompt:

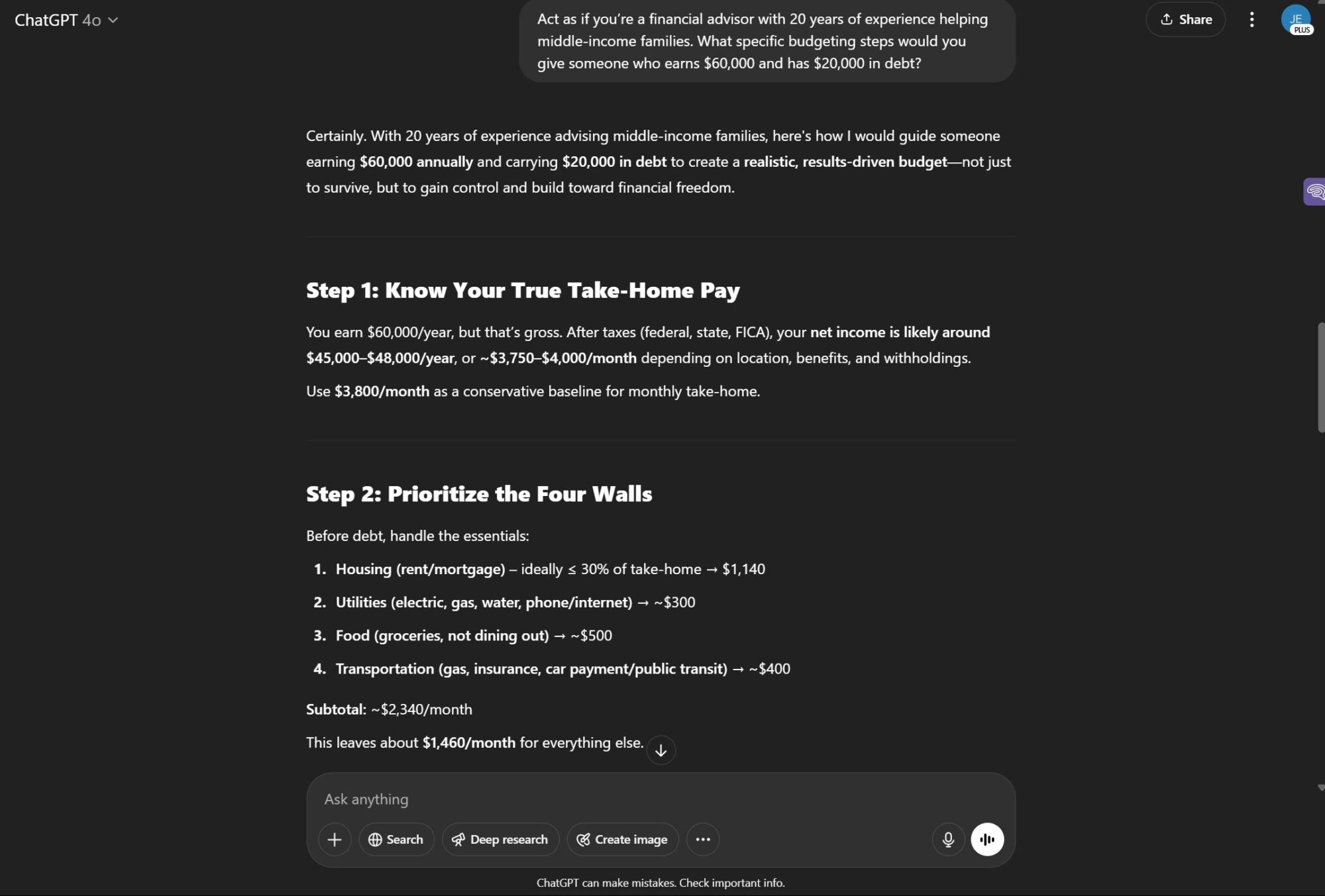

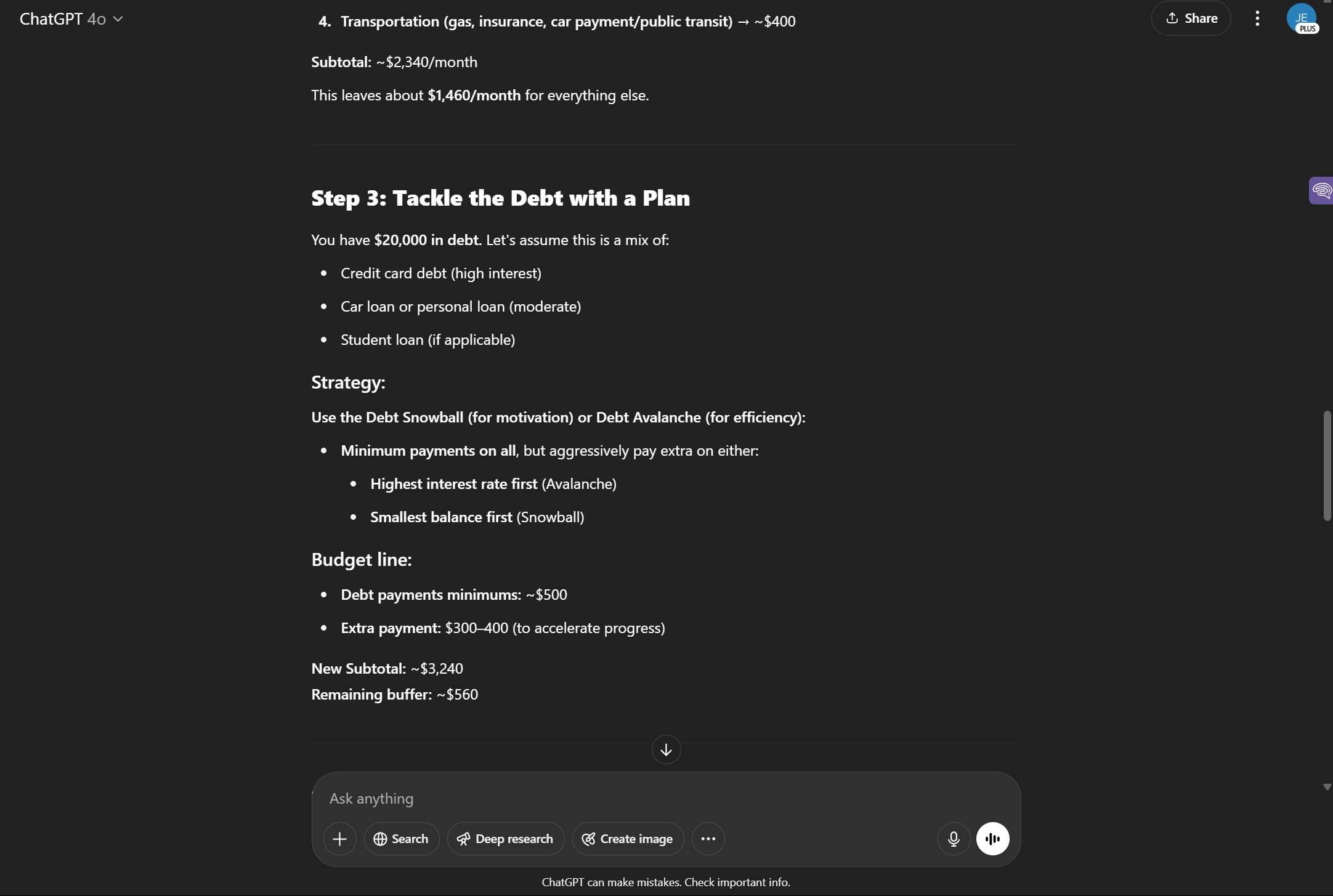

Next is an example prompt that adopts the “act as if” approach:

- “Act as if you’re a financial advisor with 20 years of experience helping middle-income families. What specific budgeting steps would you give someone who earns $60,000 and has $20,000 in debt?”

Same underlying question. But the second version, which adds a role and concrete numbers, generates answers that are more tailored, more realistic, and, here’s the kicker, much more useful.

Why? Because the model now has a fictional identity to perform. It’s not guessing in a vacuum. It’s channeling the language, priorities, and constraints of that role.
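
To make the comparison repeatable, the role framing can be wrapped in a small helper. This is only a sketch: `build_role_prompt` and its parameter names are made up for illustration, and it simply assembles the text you would paste into a chat window.

```python
def build_role_prompt(role: str, experience: str, audience: str, question: str) -> str:
    """Wrap a plain question in an "act as if" role framing."""
    return f"Act as if you're {role} with {experience} helping {audience}. {question}"


# The generic prompt from the first example, unchanged.
generic_prompt = "What are some ways to budget my money better?"

# The role-framed prompt from the second example, built from its parts.
role_prompt = build_role_prompt(
    role="a financial advisor",
    experience="20 years of experience",
    audience="middle-income families",
    question=(
        "What specific budgeting steps would you give someone "
        "who earns $60,000 and has $20,000 in debt?"
    ),
)

print(generic_prompt)
print(role_prompt)
```

Keeping the role, experience, audience, and question as separate arguments makes it easy to swap one piece at a time and see which part of the framing actually changes the answer.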

Where “Act As If” Originated and How It Traveled to AI Prompts

Long before prompt engineers started whispering it into APIs, “act as if” was already doing work in therapy, performance coaching, and behavioral psychology.

Cognitive-behavioral therapists often encourage clients to “act as if” they were already confident, already in control. It’s a tool to help rewrite mental scripts. Actors have used it for decades to step into roles. And copywriters? They’ve long known that writing in character makes their work punchier and more persuasive.

In the world of AI, the phrase emerged as a workaround during early GPT-3 experiments. Researchers and hobbyists realized that open-ended prompts like “explain quantum mechanics” produced mushy answers. But when they said, “Act as if you’re Richard Feynman explaining quantum mechanics to a curious high schooler,” the output was suddenly lucid and engaging.

The phrase spread quickly on forums like Reddit’s r/ChatGPT and Hacker News. Developers began embedding “act as” roles directly into system prompts. Today, the technique has become one of the foundational tools of prompt engineering, even if it still flies under the radar for most casual users.
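
Here is roughly what “embedding a role in a system prompt” can look like. The sketch assumes a chat-style interface that accepts a list of messages, each with a `role` and a `content` field. That layout is common, but the exact format depends on the provider. `make_messages` is a hypothetical helper, and nothing is sent to any service.

```python
def make_messages(role_description: str, user_question: str) -> list[dict[str, str]]:
    """Put the persona in a system message and the question in a user message."""
    return [
        # Assumed convention: the system message sets the persona for the whole chat.
        {"role": "system", "content": f"Act as {role_description}."},
        {"role": "user", "content": user_question},
    ]


messages = make_messages(
    "Richard Feynman explaining quantum mechanics to a curious high schooler",
    "Explain quantum mechanics.",
)

for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Putting the role in the system message means every later question in the conversation inherits it, so the user doesn’t have to repeat “act as if” each time.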

What Happens Under the Hood

To understand why this works, we need a peek at how LLMs function. These models are trained on huge swaths of text: books, websites, research papers, dialogue, code, and more. When you ask a question, the model isn’t searching a database for an answer. It’s generating a sequence of words one piece at a time, based on probability distributions learned from patterns in that text.

By framing your request with “act as if,” you’re essentially conditioning the model so that text resembling that role becomes more probable. It isn’t literally retrieving a different slice of its training data, but the effect is similar: instead of giving you a Wikipedia-flavored overview, it might now simulate the cadence of a seasoned journalist, or the problem-solving mindset of a software engineer.

It’s like tuning a radio—not changing the station, but improving the signal-to-noise ratio so you get something clear, focused, and resonant.

This is especially useful when:

- You need context-sensitive answers (e.g., legal, medical, technical)

- You want a tone that matches a specific situation (e.g., empathetic vs. blunt)

- You’re working with multiple constraints (e.g., a limited budget, beginner audience, short timeframe)
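
The under-the-hood idea can be illustrated with a toy model. Everything here is invented for illustration: the tiny `CORPUS`, the two framing labels, and the `next_word_probs` helper. A real LLM uses a neural network over tokens, not a lookup table, but the principle that context shifts the probabilities is the same.

```python
from collections import Counter

# Made-up (framing, next word) pairs standing in for training text.
CORPUS = [
    ("generic", "save"), ("generic", "save"),
    ("generic", "spend"), ("generic", "budget"),
    ("advisor", "budget"), ("advisor", "budget"),
    ("advisor", "allocate"), ("advisor", "save"),
]


def next_word_probs(framing: str) -> dict[str, float]:
    """Estimate next-word probabilities conditioned on the framing."""
    counts = Counter(word for f, word in CORPUS if f == framing)
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}


# The same vocabulary, but the likeliest word changes with the framing.
print(next_word_probs("generic"))
print(next_word_probs("advisor"))
```

In this toy data, “save” is the likeliest continuation under the generic framing, while “budget” takes over once the advisor framing is in place. The role didn’t add new knowledge; it changed which continuation is most probable.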

Common Variations That Work Just as Well

While “act as if” is the classic phrase, here are a few variations that tap the same underlying mechanics:

- “You are…” Example: “You are a seasoned UX designer critiquing a beginner’s portfolio.”

- “Imagine you’re…” Example: “Imagine you’re a CEO preparing for a difficult board meeting.”

- “Pretend you have…” Example: “Pretend you have five years of experience as a crisis counselor.”

They all do the same thing: constrain the model’s output and reduce vagueness by anchoring it to a specific role or context.

A Few Ground Rules (So You Don’t Overdo It)

Now before you go “act as if”-ing your way through every prompt, a few things to keep in mind:

- Be specific, not just fancy. “Act as if you’re a genius” won’t help much. But “Act as if you’re a high school math teacher helping a struggling student grasp quadratic equations” will. So will “Act as if you’re an AP U.S. History teacher designing a 60-minute lesson plan that engages bored students and connects civil rights history to current events.” With that much detail, the suggestions that come back are far more likely to be immediately usable.

- Pair it with constraints. Combine roleplay with limits: word count, reading level, tone. The more tightly defined the sandbox, the better the results.

- Stay skeptical. Eerily convincing doesn’t always mean factually right. Always fact-check if you’re using the answer for anything that matters.
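
The “pair it with constraints” rule is easy to turn into a habit. Below is a minimal sketch; `add_constraints` and its keyword arguments are hypothetical names, and the wording of each constraint sentence is just one reasonable choice.

```python
from typing import Optional


def add_constraints(
    role_prompt: str,
    *,
    max_words: Optional[int] = None,
    reading_level: Optional[str] = None,
    tone: Optional[str] = None,
) -> str:
    """Append whichever limits were given to a role-framed prompt."""
    parts = [role_prompt]
    if max_words is not None:
        parts.append(f"Keep the answer under {max_words} words.")
    if reading_level:
        parts.append(f"Write at a {reading_level} reading level.")
    if tone:
        parts.append(f"Use a {tone} tone.")
    return " ".join(parts)


prompt = add_constraints(
    "Act as if you're a high school math teacher helping a struggling "
    "student grasp quadratic equations.",
    max_words=150,
    reading_level="ninth-grade",
    tone="patient",
)
print(prompt)
```

Each constraint is optional, so the same helper covers a quick one-liner and a tightly defined sandbox.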

So Why Does This Matter?

In a world drowning in automation, precision is power. The people who get the most out of AI aren’t the loudest or the most technical. They’re the ones who understand how to frame a question, how to guide a system, how to shape output without micromanaging it.

“Act as if” works because it invites the machine to play a role, and in doing so, makes it more useful to the user.

Next time your AI feels like it’s just going through the motions, remember those three words. And don’t just ask a question. Ask it like a director setting a scene.